How to Convert Long Videos into Short Clips Using AI in 2025

Learning how to convert long videos into short clips is one of the most valuable skills for modern content creators. With audiences increasingly consuming short-form content on platforms like TikTok, Instagram Reels, and YouTube Shorts, efficiently repurposing longer videos has become essential for staying visible across platforms.

AI-powered tools have transformed this process by automatically detecting the most engaging moments and generating ready-to-share videos in minutes. What once required hours of manual editing can now be done in a fraction of the time, so creators can focus on producing new content rather than repeatedly re-editing existing material.

This guide explores the main AI approaches used to convert long videos into short clips, from simple audio-driven selection to multimodal transformers. Understanding these methods helps you choose the right tools for your content type and workflow.

Understanding AI Approaches to Video Conversion

Several strategies can be used to convert long videos into short clips. Each has different strengths depending on your content type, available resources, and desired output quality.

One of these approaches, and the one used in CLIPS, our long-to-short video editor at Repostit, is Hybrid Audio/Event-Driven Selection. It is particularly suited to podcasts and other videos that are more audio-focused than visual, because it highlights the most engaging moments based on audio cues combined with semantic content analysis.

Let’s explore each major AI approach in detail, examining how they work and when they’re most effective for helping you Convert Long Videos into Short Clips.

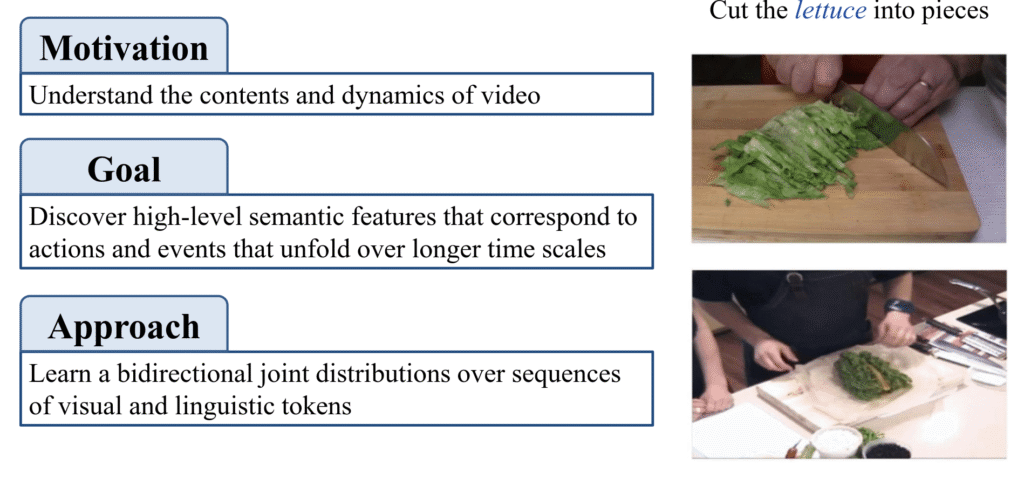

VATT: Video-Audio-Text Transformer

One of the most powerful AI approaches for automatically generating short videos from long-form content is the multimodal transformer, which jointly models video, audio, and text. Because these systems understand content across several modalities at once, they can pick out clip-worthy moments that a single-modality model would miss.

An early and influential example is VideoBERT, which adapts the BERT architecture to learn a joint visual-linguistic representation for video sequences. VATT (Video-Audio-Text Transformer), which gives this section its name, extends the idea by learning from raw video, audio, and text together. VideoBERT has influenced many subsequent approaches to automatic video summarization and clip generation.

How VideoBERT Works

The key idea behind VideoBERT and similar architectures is to capture high-level semantic features that correspond to actions and events unfolding over longer time scales. This lets the system identify genuinely meaningful moments rather than simply detecting visual changes or audio peaks.

The process works in several steps:

- Video frames are converted into discrete visual tokens using vector quantization of spatio-temporal features

- Spoken audio is transcribed into text using automatic speech recognition (ASR)

- Both modalities are fed into a BERT-style bidirectional transformer

- The model learns the joint distribution over sequences of visual and linguistic tokens

- This joint understanding enables identification of the most meaningful segments
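The first steps above can be illustrated with a minimal sketch. It assumes frame features have already been extracted and that a small codebook of centroids exists (in practice this would be learned by clustering many features). The function names, the toy 2-D features, and the `[V0]`-style token names are illustrative, and the `[CLS]`/`[SEP]` layout is a simplification of a BERT-style input, not VideoBERT's exact format.

```python
import math

def nearest_centroid(feature, centroids):
    """Return the index of the centroid closest to `feature` (Euclidean distance)."""
    distances = [math.dist(feature, c) for c in centroids]
    return distances.index(min(distances))

def build_joint_sequence(frame_features, centroids, transcript_words):
    """Quantize frame features into visual tokens and join them with text tokens."""
    visual_tokens = [f"[V{nearest_centroid(f, centroids)}]" for f in frame_features]
    # Simplified BERT-style layout: text tokens, a separator, then visual tokens.
    return ["[CLS]"] + transcript_words + ["[SEP]"] + visual_tokens + ["[SEP]"]

# Toy 2-D features; real systems use high-dimensional spatio-temporal features.
centroids = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
frame_features = [(0.1, -0.2), (0.9, 1.2), (4.8, 5.1)]
sequence = build_joint_sequence(frame_features, centroids, ["stir", "the", "sauce"])
print(sequence)
```

The resulting token sequence is what a bidirectional transformer would consume. Training that model is outside the scope of this sketch.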

Evolution of Multimodal Models

Several newer models build on this architecture while keeping its core structure intact. Models like HERO, UniVL, and MMT extend these capabilities to classify videos and identify the best segments automatically, making large-scale clip generation increasingly practical.

The continuous improvement of these models means that automatic clip generation becomes more accurate and contextually aware with each new iteration. For content creators, this translates to higher quality output with less manual intervention required.

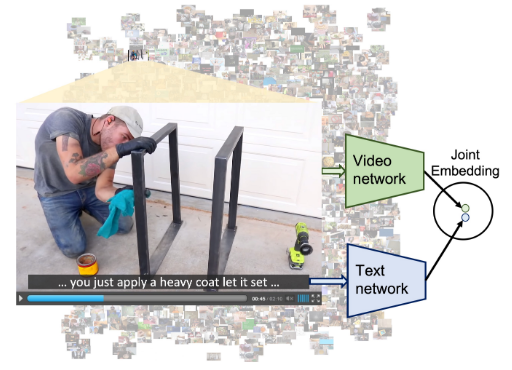

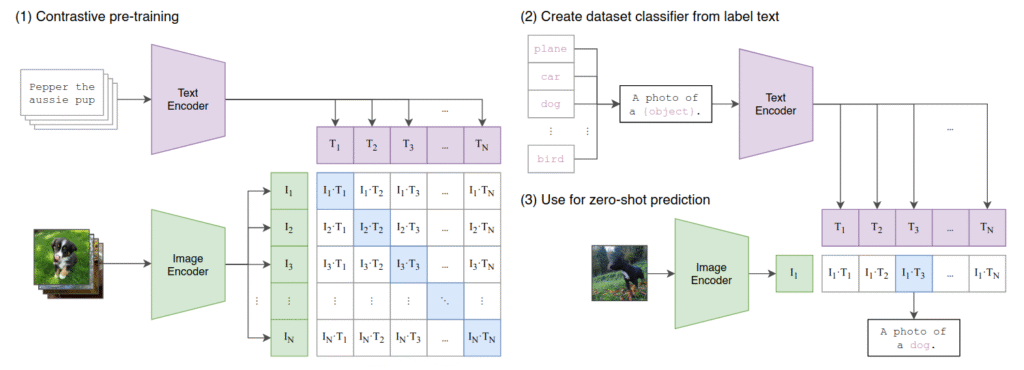

Contrastive CLIP-Style Retrieval for Zero-Shot Moment Scoring

Contrastive or CLIP-style retrieval is a powerful AI approach for automatically identifying the most interesting moments in a video without requiring task-specific training data. This flexibility makes it particularly valuable for creators who need to Convert Long Videos into Short Clips across diverse content types.

How CLIP-Style Retrieval Works

The key idea is to embed both visual content (frames or short video clips) and text prompts into a shared semantic space. With both in the same space, segments can be selected by conceptual queries rather than predefined patterns, without any task-specific training.

Once embedded, candidate video segments can be ranked by similarity to target prompts such as:

- “Best tip” for educational content

- “Funny reaction” for entertainment videos

- “Product demo” for marketing content

- “Key insight” for interviews and podcasts

- “Emotional moment” for storytelling content

This enables zero-shot scoring: the model can find relevant clips even if it has never seen labeled examples of the specific task. For creators who work with varied content types, being able to switch prompts without retraining is invaluable.

Segment Scoring and Selection

Once the segments are embedded, each clip can be compared to the text prompts using a similarity metric, typically cosine similarity. Segments with the highest similarity scores are selected as the most relevant moments for your short clips.
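As a rough illustration of this ranking step, the sketch below scores a few segments against one prompt using cosine similarity. The embeddings are hand-written 3-D stand-ins. In a real pipeline they would come from a CLIP-style model, and the segment labels and function names here are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank_segments(segment_embeddings, prompt_embedding, top_k=2):
    """Return the top_k (segment_id, similarity) pairs, most similar first."""
    scored = [
        (seg_id, cosine_similarity(emb, prompt_embedding))
        for seg_id, emb in segment_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hand-written stand-ins for model embeddings.
segment_embeddings = {
    "00:00-00:30": [0.9, 0.1, 0.0],
    "00:30-01:00": [0.1, 0.8, 0.2],
    "01:00-01:30": [0.7, 0.6, 0.1],
}
prompt_embedding = [1.0, 0.0, 0.0]  # stand-in for an embedded prompt such as "funny reaction"
top_segments = rank_segments(segment_embeddings, prompt_embedding)
print(top_segments)
```

Because cosine similarity ignores vector length, only the direction of each embedding matters, which is why it is the usual choice for comparing embeddings from a shared space.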

These scores can be combined with additional features to refine the selection process:

- Audio peaks that indicate excitement or emphasis

- Transcript relevance for spoken content

- Motion intensity for action-focused moments

- Face detection for human-centric content

- Scene change detection for visual variety

The top-scoring clips are then assembled into short videos, enabling automated, zero-shot generation of engaging content with little manual intervention. This makes the approach efficient at scale.
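One simple way to combine the similarity score with the additional features listed above is a weighted sum. The sketch below assumes every feature has already been normalized to the 0 to 1 range. The feature names and weights are illustrative choices, not values from any particular tool.

```python
def fuse_scores(features, weights):
    """Weighted sum over the features named in `weights`; missing features count as 0."""
    return sum(weights[name] * features.get(name, 0.0) for name in weights)

# Hypothetical weights; in practice these would be tuned per content type.
weights = {
    "clip_similarity": 0.5,
    "audio_peak": 0.2,
    "transcript_relevance": 0.2,
    "motion": 0.1,
}
candidates = {
    "seg_a": {"clip_similarity": 0.80, "audio_peak": 0.10, "transcript_relevance": 0.40, "motion": 0.20},
    "seg_b": {"clip_similarity": 0.60, "audio_peak": 0.90, "transcript_relevance": 0.70, "motion": 0.50},
}
fused = {seg: fuse_scores(feats, weights) for seg, feats in candidates.items()}
best_segment = max(fused, key=fused.get)
print(best_segment, fused)
```

Here the segment with the lower similarity score still wins because its audio and transcript signals are much stronger, which is the point of combining features.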

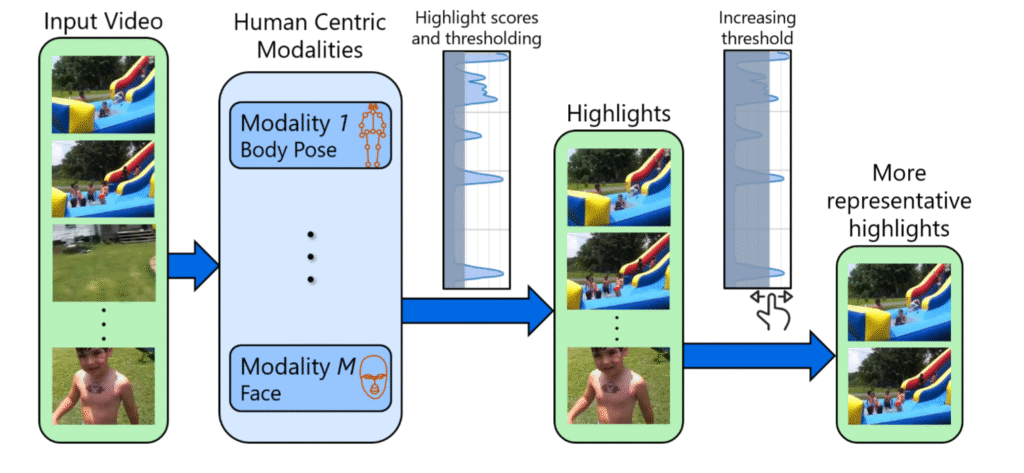

Supervised Engagement and Highlight Models

Supervised highlight detection methods leverage historical user engagement to identify the most compelling segments of videos. These approaches provide another powerful way to Convert Long Videos into Short Clips by learning directly from what has worked in the past.

Learning from Historical Signals

These approaches typically train classifiers or regressors to predict a “highlight score” from labeled data, such as past clips that achieved high viewership or watch-time. By incorporating multimodal features such as visual embeddings of the video frames and the transcript text, these models directly optimize for content that has historically performed well.

This makes supervised models particularly effective when sufficient engagement metrics are available. For example, HighlightMe specifically focuses on detecting highlights from human-centric videos, making it ideal for interview and vlog content.
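To make the idea of a learned highlight score concrete, here is a minimal sketch that trains a tiny logistic regression classifier with plain gradient descent. The two features and the four labeled examples are invented for illustration. A production model would use far richer features and far more data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def highlight_score(x, weights, bias):
    """Predicted probability that a segment with features `x` is a highlight."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train_highlight_model(samples, labels, epochs=500, lr=0.5):
    """Fit logistic regression with stochastic gradient descent on the log loss."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = highlight_score(x, weights, bias) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# Hypothetical features per segment: [audio_energy, speech_rate], both scaled to 0..1.
# Label 1 means a past clip from a similar segment performed well.
samples = [[0.9, 0.8], [0.8, 0.9], [0.2, 0.1], [0.1, 0.3]]
labels = [1, 1, 0, 0]
weights, bias = train_highlight_model(samples, labels)
print(highlight_score([0.85, 0.85], weights, bias))
print(highlight_score([0.15, 0.15], weights, bias))
```

The first printed score should be well above 0.5 and the second well below it, mirroring how a trained model separates segments that resemble past successes from those that do not.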

Frame Scoring and Segment Assembly

With a supervised model, clip generation typically follows these steps:

- Each frame receives a highlight score based on the trained model’s predictions

- Frames with higher scores are considered more “highlight-worthy”

- Consecutive high-scoring frames are grouped into segments to form coherent clips

- Each segment is ranked based on the average score of its frames

- Slight trimming or smoothing is applied to make clips visually natural

- The final output is a set of clips showcasing the most engaging moments

The result is automatic identification of significant or engaging moments in the original video, focusing specifically on human actions and interactions without requiring manual editing. This data-driven approach ensures that the clips generated are likely to resonate with audiences based on proven engagement patterns.
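The scoring and grouping steps above can be sketched in a few lines. This example assumes per-frame scores are already available from a trained model. The moving-average window, the 0.55 threshold, and the minimum run length are arbitrary illustrative values.

```python
def smooth(scores, window=3):
    """Centered moving average; frames at the edges use the neighbors that exist."""
    half = window // 2
    smoothed = []
    for i in range(len(scores)):
        chunk = scores[max(0, i - half): i + half + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed

def group_segments(scores, threshold, min_len=2):
    """Return (start, end_exclusive, mean_score) for runs of frames at or above threshold."""
    segments, start = [], None
    # A trailing sentinel closes any run that reaches the last frame.
    for i, score in enumerate(scores + [float("-inf")]):
        if score >= threshold and start is None:
            start = i
        elif score < threshold and start is not None:
            if i - start >= min_len:
                run = scores[start:i]
                segments.append((start, i, sum(run) / len(run)))
            start = None
    # Rank segments by their average score, best first.
    return sorted(segments, key=lambda seg: seg[2], reverse=True)

frame_scores = [0.1, 0.2, 0.8, 0.9, 0.7, 0.2, 0.1, 0.6, 0.9, 0.9, 0.8, 0.1]
segments = group_segments(smooth(frame_scores), threshold=0.55)
print(segments)
```

Smoothing before thresholding keeps a single noisy frame from splitting one natural moment into two clips.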

Hybrid Audio and Event-Driven Selection

Audio-driven selection is a highly effective AI approach for automatically generating short clips from long-form audio or video content. It is particularly powerful for podcasters, musicians, and speakers who want clips built around naturally occurring audio cues.

Identifying Audio-Based Engagement Signals

The key idea is to identify moments in the audio track that are naturally engaging, such as:

- Laughter indicating humor or entertainment value

- Applause signaling approval or key moments

- Musical beats and drops for music content

- Voice emphasis or excitement in speech

- Silence followed by impactful statements

- Audience reactions in live recordings

These audio events are used to center the clip, ensuring the most interesting or entertaining moments are captured. This approach works particularly well for podcasts, music videos, comedy shows, and talks where audio cues often signal the most compelling content.
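Centering a clip on a detected event is simple arithmetic, as the sketch below shows. It assumes the event timestamp is already known and keeps the clip inside the bounds of the source video. The function name and the 30-second clip length are illustrative.

```python
def clip_window(event_time, clip_len, video_len):
    """Return (start, end) in seconds for a clip centered on event_time, kept inside the video."""
    clip_len = min(clip_len, video_len)
    start = event_time - clip_len / 2
    # Shift the window if it would start before 0 or run past the end of the video.
    start = max(0.0, min(start, video_len - clip_len))
    return start, start + clip_len

print(clip_window(event_time=125.0, clip_len=30.0, video_len=600.0))
print(clip_window(event_time=5.0, clip_len=30.0, video_len=600.0))
print(clip_window(event_time=595.0, clip_len=30.0, video_len=600.0))
```

In practice you might bias the window to start a little earlier than the midpoint, since the setup for a laugh or applause usually comes before the reaction itself.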

Technical Implementation

Models for audio-driven selection typically rely on classifiers trained on large-scale datasets like AudioSet, which contains roughly two million labeled clips covering hundreds of audio event classes. Beat detectors, laugh detectors, and applause detectors scan the audio track for peaks in these categories, enabling automatic identification of highlight moments.
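The peak-scanning step can be sketched as follows, assuming a classifier has already produced one probability per time window for a class such as laughter. The probabilities, the threshold, and the minimum gap are made-up illustrative values.

```python
def find_event_peaks(probabilities, hop_seconds, threshold=0.5, min_gap_seconds=5.0):
    """Return timestamps of local maxima above threshold, at least min_gap_seconds apart.

    When two peaks fall closer than the gap, the earlier one is kept.
    """
    peaks = []
    for i in range(1, len(probabilities) - 1):
        p = probabilities[i]
        is_local_max = p >= probabilities[i - 1] and p > probabilities[i + 1]
        if p >= threshold and is_local_max:
            t = i * hop_seconds
            if not peaks or t - peaks[-1] >= min_gap_seconds:
                peaks.append(t)
    return peaks

# One hypothetical laughter probability per second of audio.
laughter_prob = [0.05, 0.1, 0.7, 0.9, 0.4, 0.1, 0.1, 0.6, 0.65, 0.2, 0.05, 0.8, 0.3]
peaks = find_event_peaks(laughter_prob, hop_seconds=1.0)
print(peaks)
```

The minimum gap prevents one long burst of laughter from producing several overlapping clips.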

This approach can be further enhanced by combining it with audio transcription using models like Whisper, which converts speech to text with remarkable accuracy. The resulting text segments can then be analyzed alongside the detected audio events, enabling more precise clip selection based on both auditory peaks and semantic content.
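A minimal sketch of this combination is shown below. It assumes transcript segments are dictionaries with `start`, `end`, and `text` fields (the shape of the segments Whisper returns) and that audio event timestamps are already available. The keyword list, the bonus values, and the two-second grace period are hypothetical.

```python
def score_transcript_segments(segments, event_times, keywords,
                              event_bonus=1.0, keyword_bonus=0.5, grace_seconds=2.0):
    """Score each transcript segment by nearby audio events and keyword hits."""
    scored = []
    for seg in segments:
        # Reactions often follow the line that caused them, so allow a short grace period.
        events_nearby = sum(
            1 for t in event_times if seg["start"] <= t <= seg["end"] + grace_seconds
        )
        words = [w.strip(".,!?") for w in seg["text"].lower().split()]
        keyword_hits = sum(1 for w in words if w in keywords)
        score = event_bonus * events_nearby + keyword_bonus * keyword_hits
        scored.append({**seg, "score": score})
    return sorted(scored, key=lambda s: s["score"], reverse=True)

segments = [
    {"start": 0.0, "end": 12.0, "text": "Welcome back to the show."},
    {"start": 12.0, "end": 31.0, "text": "The biggest mistake founders make is hiring too fast."},
    {"start": 31.0, "end": 45.0, "text": "Anyway, let's talk about the weather."},
]
event_times = [32.5]  # e.g. laughter detected just after the second segment ends
keywords = {"mistake", "secret", "biggest"}
ranked = score_transcript_segments(segments, event_times, keywords)
print(ranked[0]["text"], ranked[0]["score"])
```

A real system would likely replace the keyword match with a semantic model, but the structure stays the same: audio events and text relevance each contribute to one score per segment.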

Why Hybrid Methods Excel

This hybrid method is fast, reliable, and particularly suited to audio-first formats because it leverages inherent engagement signals from the audio while also incorporating meaningful textual cues. By combining audio event signals, transcription analysis, and temporal context, it generates clips that align with the most attention-grabbing portions of the content.

For podcast creators and interview-based content, the hybrid approach often produces superior results compared to purely visual methods because the most valuable moments are typically defined by what’s being said rather than what’s being shown.

Reinforcement Learning for Sequential Selection

Reinforcement learning (RL) provides a powerful approach for automatically generating short clips by treating clip selection as a sequential decision-making problem. Its main advantage is that it can optimize directly for how the resulting clips perform.

How Reinforcement Learning Approaches Clip Generation

Instead of selecting moments independently, an RL agent considers the temporal context of previous selections and aims to maximize a long-term reward. This reward can be based on engagement metrics like views, watch-time, or click-through rates, making RL particularly suited for optimizing for downstream key performance indicators (KPIs) rather than just immediate audio or visual saliency.

The Learning Process

In practice, the model interacts with the video or audio content sequentially, proposing clip boundaries or highlighting segments. The process works as follows:

- The agent observes the current state of the video content

- It proposes potential clip boundaries or segments

- A reward signal evaluates the selection based on historical data or real feedback

- The agent updates its policy to maximize future rewards

- Over time, it learns to balance content diversity, pacing, and attention-grabbing moments

The reward signal can be simulated from historical data or obtained from real user feedback. Either way, it reinforces selections that lead to higher engagement, and over time the agent learns a policy that balances these factors to maximize overall performance.
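The learning loop can be illustrated with a deliberately reduced REINFORCE-style policy gradient sketch. Each segment gets an independent keep-or-skip probability, so the example drops the temporal state a full sequential agent would observe. The engagement values, the clip budget, and the penalty are all simulated assumptions rather than real feedback.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_selection_policy(engagement, budget=2, episodes=5000, lr=0.1, seed=0):
    """Learn per-segment selection probabilities with a REINFORCE-style update."""
    rng = random.Random(seed)
    logits = [0.0] * len(engagement)
    baseline = 0.0  # running average reward, used to reduce variance
    for _ in range(episodes):
        probs = [sigmoid(l) for l in logits]
        actions = [1 if rng.random() < p else 0 for p in probs]
        # Simulated reward: engagement of chosen segments, minus 1.0 per clip over budget.
        reward = sum(e for e, a in zip(engagement, actions) if a)
        reward -= 1.0 * max(0, sum(actions) - budget)
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        # For a Bernoulli policy, d log pi / d logit = action - prob.
        logits = [l + lr * advantage * (a - p) for l, a, p in zip(logits, actions, probs)]
    return [sigmoid(l) for l in logits]

# Hypothetical historical engagement per candidate segment.
engagement = [0.1, 0.9, 0.2, 0.85, 0.1]
selection_probs = train_selection_policy(engagement)
print([round(p, 2) for p in selection_probs])
```

With a budget of two clips, the policy should learn to favor the two high-engagement segments and skip the rest. Changing the reward function changes what the agent learns, which is why reward design matters so much in this approach.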

Key Research and Implementations

Key references for this approach include Deep Reinforcement Learning for Unsupervised Video Summarization with Diversity-Representativeness Reward (DR-DSN, often shortened to DSN) and related RL-based video summarization work. These show that RL can learn to select highlights without explicit human annotations for every segment, for example by rewarding summaries that are diverse and representative of the full video.

While RL offers significant flexibility and potential performance gains, it is generally harder to train. Success requires careful reward design, exploration strategies, and often a simulated or partially observed environment to stabilize learning. For most content creators, the practical benefits may not justify the complexity compared to simpler approaches.

Cost Considerations for AI Clip Generation

Understanding the cost implications helps you choose the right approach to Convert Long Videos into Short Clips for your budget and needs. The cost depends on several factors including the model used, source video length, and infrastructure choices.

Lightweight Methods

Lightweight audio-driven selection methods and simple CLIP-style retrieval can be very fast and inexpensive. When run in batch on consumer-grade GPUs or cloud instances, these approaches often cost just a few cents per clip. For creators who generate clips frequently, these methods offer the best cost-to-value ratio.

Multimodal Transformers

More complex multimodal transformers like VideoBERT or VATT require significantly more compute as they process both video frames and audio/text embeddings simultaneously. Running these models in the cloud can cost anywhere from a few dollars to tens of dollars per clip, depending on:

- Video resolution and frame rate

- Source video length

- Batching efficiency

- Cloud provider pricing

- Model optimization level
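A back-of-the-envelope estimate shows how these factors interact. The sketch below is a simple cost model, not a pricing reference. The processing speed, hourly GPU price, and number of clips are placeholder assumptions you would replace with your own measurements.

```python
def estimate_cost_per_clip(video_minutes, realtime_factor, gpu_price_per_hour, clips_per_video):
    """Estimate cloud cost per clip.

    realtime_factor is compute time divided by video duration, so 0.5 means
    one hour of video takes half an hour of GPU time.
    """
    gpu_hours = (video_minutes / 60.0) * realtime_factor
    return gpu_hours * gpu_price_per_hour / clips_per_video

# All inputs are hypothetical placeholders.
cost = estimate_cost_per_clip(
    video_minutes=60,
    realtime_factor=0.5,
    gpu_price_per_hour=2.0,
    clips_per_video=10,
)
print(f"Estimated cost per clip: ${cost:.2f}")
```

Higher resolution and frame rate mostly raise the realtime factor, while better batching and model optimization lower it, so measuring that one number on your own content is the quickest way to compare approaches.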

Reinforcement Learning Approaches

Reinforcement learning-based sequential selection is generally the most resource-intensive approach because it involves simulating reward feedback over multiple potential clip sequences. Training an RL model has a high upfront cost, but once trained, generating new clips becomes relatively cheaper.

Overall, careful selection of models and optimization of pipeline components can significantly reduce costs while maintaining high-quality clips. For most creators who generate clips regularly, starting with audio-driven or CLIP-style methods provides the best balance of quality and affordability.

Practical Applications and Use Cases

Understanding when to use each approach helps you Convert Long Videos into Short Clips more effectively for your specific content type and goals.

Podcasts and Interviews

For podcast content, hybrid audio/event-driven selection typically produces the best results. The combination of speech transcription, audio event detection, and semantic analysis identifies the most quotable and shareable moments. This approach can Convert Long Videos into Short Clips that highlight key insights, funny moments, or controversial statements.

Educational Content

CLIP-style retrieval works well for educational videos where you want to extract specific topics or concepts. By using prompts like “key lesson” or “important example,” you can Convert Long Videos into Short Clips that serve as standalone educational moments for social media distribution.

Entertainment and Vlogs

Supervised engagement models excel for entertainment content where you have historical performance data. These models learn what makes content engaging based on past success, helping you Convert Long Videos into Short Clips that are statistically more likely to perform well.

Live Events and Performances

Audio-driven selection is ideal for concerts, comedy shows, and live events. Audience reactions like applause, laughter, and cheers naturally indicate the best moments, making it straightforward to Convert Long Videos into Short Clips that capture peak entertainment value.

Using Repostit to Convert Long Videos into Short Clips Automatically

If your goal is automation and efficiency, Repostit is built to simplify the entire process. Rather than manually downloading, editing, and uploading each video, Repostit can automatically Convert Long Videos into Short Clips using its powerful AI Video-to-Short feature. This tool analyzes your long-form content, extracts the most engaging moments, converts them to vertical format, and publishes them directly to platforms like Instagram Reels, TikTok, and YouTube Shorts, all without degrading quality.

For a detailed walkthrough of how this works with YouTube content specifically, see our complete guide on how to repost YouTube videos on Instagram.

Why Repostit Stands Out for Converting Long Videos into Short Clips

- AI-Powered Video-to-Short: Repostit’s AI analyzes your long-form video and automatically creates short clips optimized for social media platforms.

- Automatic format conversion: Transform horizontal 16:9 videos into vertical 9:16 content optimized for short-form platforms without manual editing.

- True automation: Paste your video URL once, and Repostit handles everything after that.

- Quality preservation: Maintain high video quality throughout the conversion and posting process with no degradation.

- Auto-generated captions: Boost engagement with automatically added subtitles that make your content accessible and scroll-stopping.

- Multi-platform distribution: Publish your generated clips directly to Instagram, TikTok, YouTube Shorts, and more from one dashboard.

Step 1: Open the Repostit Dashboard and Paste Your Video URL

Sign in to your Repostit dashboard. This is the hub for all your automations. To Convert Long Videos into Short Clips, start by copying the URL of your source video from YouTube, Vimeo, or another supported platform. In the dashboard, navigate to the “Video to Short” feature and paste your link. Repostit will automatically fetch and analyze your video content, preparing it for AI-powered transformation into platform-ready shorts.

Step 2: Let AI Create Automatic Shorts from Your Video

Once you’ve pasted your video URL, Repostit’s AI engine gets to work. The Video-to-Short feature automatically analyzes your long-form content and identifies the most engaging moments to Convert Long Videos into Short Clips. The AI performs several intelligent operations:

- Scans your entire video for high-engagement moments using audio and visual analysis

- Automatically extracts the best 60 to 90 second clips optimized for short-form platforms

- Converts the horizontal 16:9 format to vertical 9:16 format intelligently

- Adds auto-generated captions to boost engagement and accessibility

- Creates multiple clip options for you to choose from

This AI-powered approach eliminates hours of manual editing. The system estimates which parts of your video are likely to perform best on short-form platforms and creates multiple clips you can review and select from.
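To show what the format conversion involves geometrically, here is a small sketch that computes a full-height 9:16 crop from a horizontal frame. It is not Repostit’s implementation. The function name is hypothetical, and `center_x` stands in for wherever a subject tracker (for example, face detection) says the crop should be centered.

```python
def vertical_crop(src_width, src_height, center_x=None, target_ratio=(9, 16)):
    """Return (x, y, width, height) of a full-height crop with the target aspect ratio."""
    ratio_w, ratio_h = target_ratio
    crop_w = int(src_height * ratio_w / ratio_h)
    crop_w -= crop_w % 2  # many video encoders expect even dimensions
    crop_w = min(crop_w, src_width)
    if center_x is None:
        center_x = src_width / 2  # default to the middle of the frame
    x = int(round(center_x - crop_w / 2))
    # Keep the crop rectangle inside the source frame.
    x = max(0, min(x, src_width - crop_w))
    return x, 0, crop_w, src_height

print(vertical_crop(1920, 1080))                 # centered crop
print(vertical_crop(1920, 1080, center_x=100))   # subject near the left edge
print(vertical_crop(1920, 1080, center_x=1900))  # subject near the right edge
```

For a 1920x1080 source, only about a third of the frame width survives the crop, which is why choosing the crop center well matters more than any other step in the conversion.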

Step 3: Review, Customize, and Publish

After the AI generates your clips, you have full control over the final output. Review each clip Repostit has created and select the ones that best represent your content. You can:

- Preview each generated clip before publishing

- Edit captions and hashtags for each platform

- Schedule posts for optimal engagement times

- Publish directly to multiple platforms simultaneously

- Save clips for later use or download for manual posting

This streamlined workflow means you can convert long videos into short clips and distribute them across all your social media platforms in minutes rather than hours.

Frequently Asked Questions

How long does it take to convert long videos into short clips using AI?

Processing time depends on the source video length and the AI method used. Lightweight audio-driven methods can process an hour of content in just a few minutes, while more complex multimodal approaches may take longer. Most practical tools finish within 5 to 15 minutes for typical content lengths.

Do I need technical knowledge to use AI clip generation?

Not with modern tools. Platforms like Repostit handle all the technical complexity, so you only need to paste your video link and let the AI do the work. You can then review and select which clips to use without any programming or AI expertise.

Which AI method works best for podcast content?

Hybrid audio/event-driven selection typically works best for podcasts because it combines speech transcription analysis with audio event detection. This approach understands both what’s being said and how it’s being said, identifying moments that are both semantically interesting and emotionally engaging.

Can AI maintain video quality when creating clips?

Largely, yes. Selecting and cutting segments does not in itself reduce quality, and some tools can extract them without recompression. Steps such as cropping to a vertical format or burning in captions do require re-encoding, so the output quality depends primarily on your source video and the final export settings you choose.

How many clips can I generate from a long video?

The number of viable clips depends on your content and settings. A 60-minute podcast might yield 10 to 20 engaging short clips, while a densely packed educational video could produce even more. Most tools let you adjust sensitivity settings to generate more or fewer clips from the same video.

Is AI clip generation suitable for all video types?

AI methods work best for content with clear engagement signals like speech, music, or action. Highly visual content without audio cues may require vision-focused models. Most content types benefit from AI assistance, though optimal results depend on matching the right AI approach to your specific content type.

Can I convert long videos into short clips and post them automatically?

Yes. Tools like Repostit not only generate clips using AI but also handle distribution. Once your clips are ready, you can schedule and publish them directly to Instagram Reels, TikTok, YouTube Shorts, and other platforms without manually uploading to each one.

Summary

Automatically generating short clips from long-form video content draws on a wide spectrum of AI models across different modalities. Understanding these approaches helps you choose the right one for your specific needs.

Key approaches and representative models:

For audio-only approaches, tools like Whisper for speech-to-text and AudioSet classifiers are commonly used, while embeddings from models like VGGish, YAMNet, or OpenL3 can capture music, sound events, and emotional cues.

Vision-only models remain relevant for classic video summarization tasks. Models like VSUMM, DR-DSN, or Unsupervised Keyframe Extraction Networks can generate highlight clips without requiring complex multimodal input.

Beyond VideoBERT and VATT, multimodal transformers such as HERO, UniVL, and MMT enable joint modeling of video, audio, and text, making them highly effective for highlight detection, summarization, and retrieval tasks.

Self-supervised or contrastive video representation models like VideoCLIP, X-CLIP, and ActionCLIP extend the CLIP-style retrieval approach to video, embedding short segments for semantic similarity scoring and zero-shot clip selection.

Reinforcement learning and sequential selection models provide another powerful avenue by treating clip generation as a decision-making problem. Methods such as DSN, SUM-GAN-RL, and DRL-SV optimize sequential selection using reward signals. In a production setting, those rewards can be tied to engagement metrics like views, watch-time, or click-through rates.

Finally, generative video models including diffusion-based or video-to-video synthesis approaches offer emerging capabilities to automatically transform or condense content, opening new possibilities for creating visually coherent short clips beyond simple extraction.

For creators who want practical results without diving into the technical details, Repostit offers a streamlined solution that puts these AI capabilities at your fingertips. With the Video-to-Short feature, you can turn a long video into short clips in minutes and distribute them across all major social platforms automatically.

Together, these models and tools form a rich ecosystem for automating long-to-short video conversion, enabling more efficient production of engaging content across platforms. Whether you’re a podcast creator, educator, entertainer, or marketer, AI makes it possible to produce short clips at scale without sacrificing quality.

Related Resources

Continue learning about video content creation and distribution with these helpful guides:

- How to Repost YouTube Videos on Instagram: Complete guide to repurposing YouTube content for Instagram Reels.

- How to Cross-Post Short Videos Across All Platforms: Learn automated distribution strategies for your clips.

- Best Times to Post Short-Form Videos in 2025: Optimize your posting schedule for maximum engagement.

- How to Remove Video Watermarks Automatically: Create clean, professional-looking content for cross-posting.

Research Papers and Technical Resources

- VideoBERT Paper: Original research on joint visual-linguistic representation for video.

- CLIP Paper: Foundational research on contrastive language-image pre-training.

- Deep Reinforcement Learning for Video Summarization: Research on RL-based approaches to video highlight detection.